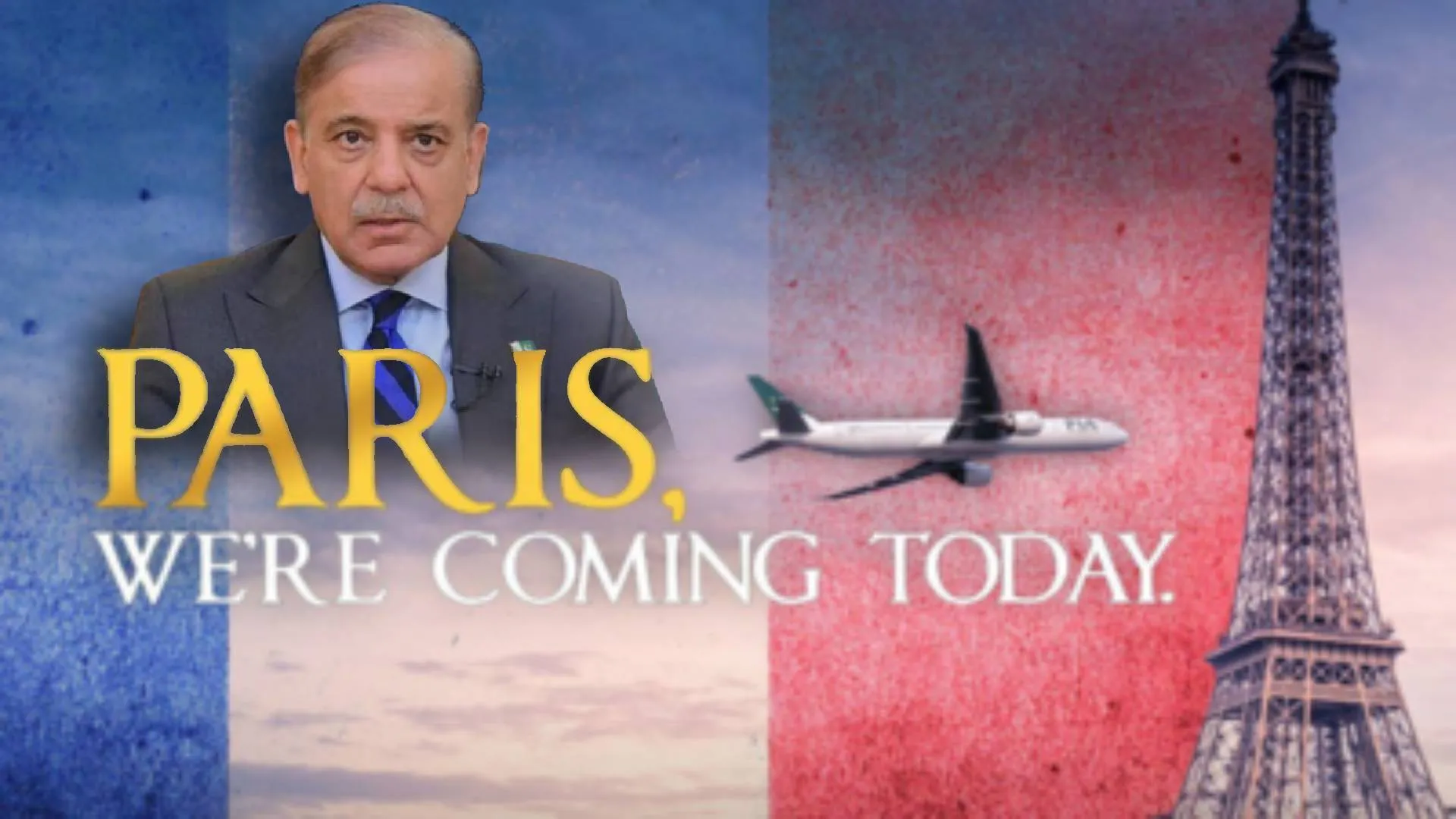

A deepfake video of Madhya Pradesh Congress chief Kamal Nath recently went viral, raising doubts about the future of the state government’s Ladli Behna Scheme. The doctored video reportedly showed Kamal Nath saying that the Congress, as soon as it formed the government, ‘will put a stop to the Ladli Behna scheme’, meaning women would stop receiving the cash benefit. This is an example of how deepfakes are used to fabricate incidents or speeches that never occurred, creating controversy and sowing discord among voters.

Similarly, in March 2022, a fake and heavily manipulated video of Ukrainian President Volodymyr Zelenskyy circulated on social media. The roughly minute-long deepfake showed a rendering of the Ukrainian president appearing to tell his soldiers to lay down their arms and surrender to Russia.

What Are Deepfakes?

As the name suggests (a blend of “deep learning” and “fake”), deepfakes are digital media, such as video, audio and images, that have been edited or manipulated using artificial intelligence (AI). The most commonly seen variety is the face-swap video, in which the face of a person in the video is replaced with another person’s face. In an electoral context, such videos can damage a candidate’s reputation by making it appear that they engaged in unethical or illicit activities.

Repercussions Of Deepfake Tech

Like many other countries, India is grappling with the risks of rapidly advancing AI technology. Chief among them is the growing use of deepfakes, which pose a direct threat to personal security and the right to privacy. Deepfakes can imitate biometric traits such as a person’s face or voice, potentially fooling systems that rely on facial or voice recognition. Anyone can fall victim to a deepfake. Consider an unexpected caller urging you to transfer money, a request you would normally refuse. Now imagine that the caller’s voice is a deepfake imitation of your father or mother. The potential for fraud is obvious.

The European Union’s AI Act

Recently, the European Union (EU) advanced a notable legislative initiative, the Artificial Intelligence (AI) Act, on which the Council and the European Parliament reached a provisional agreement in December 2023. The proposed regulation seeks to ensure that AI systems placed on the European market and used in the EU are safe and transparent, while respecting the EU’s fundamental rights and values.

One of the distinctive strengths of the EU AI Act is its “risk-based approach”: the higher the risk, the stricter the rules.

The compromise agreement provides a horizontal layer of protection, including a high-risk classification, designed so that AI systems unlikely to cause serious fundamental rights violations or other significant risks are not caught by the strictest rules. AI systems presenting only limited risk would face very light transparency obligations, for example disclosing that content was AI-generated so that users can make informed decisions about its further use.

For some uses of AI, the risk is deemed unacceptable, and these systems will therefore be banned in the EU. The provisional agreement bans, for example, cognitive behavioural manipulation; the untargeted scraping of facial images from the internet or CCTV footage; emotion recognition in the workplace and in educational institutions; social scoring; biometric categorisation to infer sensitive data such as sexual orientation or religious beliefs; and some cases of predictive policing for individuals. The EU AI Act could serve as a model for AI regulation and governance worldwide.

India’s Position In AI Regulation

Unlike the European Union, however, India lacks dedicated legislation on AI governance that addresses deepfakes and other AI-related crimes.

To participate fully in the global digital ecosystem, India needs laws that are in line with international norms and practices.

In India, the de facto basis for digital governance is the Information Technology Act, 2000 (IT Act), which was drafted when the internet was in its infancy. The 23-year-old IT Act is now out of step with today’s technological challenges and needs to be updated, both to reassess the social effects of digitisation and to ensure that the governance framework upholds the rule of law and people’s fundamental rights.

The Digital India Act, 2023

Against this backdrop, the recently announced Digital India Act, 2023 (DIA) is intended to replace the two-decade-old Information Technology Act, 2000, although it has yet to do so. The DIA is meant to address the challenges and opportunities presented by the rapid growth of the internet and emerging technologies. Its primary aim is to bring India’s regulatory landscape in sync with the digital revolution of the 21st century.

Way Forward

To sum up, artificial intelligence (AI) has become deeply woven into modern society, reshaping many sectors and aspects of human life. As AI continues to evolve at high speed, responsible development and deployment matter more than ever. It is paramount to create dedicated laws for AI governance and regulation.

However, AI regulation and governance in India cannot be confined to drafting laws alone; they must also foster a culture of digital literacy in which citizens are educated about their rights and responsibilities, and about the ethics of developing and using AI technology.

Dr Fauzia Khan, Member of Parliament, Rajya Sabha, and former Minister of State for General Administration (GAD), Education, Health, and Women and Child Development (WCD) in the Government of Maharashtra

Shruti Saxena, Pratham Ti Fellow.